In version 24.2, ReportPortal offers 2x faster reporting under 100% load thanks to a new asynchronous reporting approach. One of the key changes is the transition from a Direct exchange to a Consistent-hashing exchange, which distributes messages more evenly across queues and significantly improves consumption efficiency from RabbitMQ. Additionally, the retry mechanism was reworked to handle only messages that fail because of reporting order. Messages that fail for unrelated reasons are moved to the parking lot queue without retries.
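To illustrate why consistent hashing spreads messages evenly while keeping related messages together, here is a minimal Python sketch of a hash ring. It is not ReportPortal's or RabbitMQ's implementation: the queue names (`reporting.0` and so on), the choice of a launch ID as the routing key, and the number of ring points per queue are all assumptions made for the example.

```python
import bisect
import hashlib
from collections import Counter


def stable_hash(value: str) -> int:
    """Map a string to a stable 32-bit integer (same result on every run)."""
    return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:4], "big")


class HashRing:
    """Toy consistent-hash ring: each queue owns several points on the ring."""

    def __init__(self, queues, points_per_queue=100):
        # Hypothetical weighting: every queue gets the same number of points.
        self._ring = sorted(
            (stable_hash(f"{queue}#{i}"), queue)
            for queue in queues
            for i in range(points_per_queue)
        )
        self._points = [point for point, _ in self._ring]

    def queue_for(self, routing_key: str) -> str:
        """Pick the first ring point after the key's hash, wrapping around."""
        index = bisect.bisect_right(self._points, stable_hash(routing_key))
        return self._ring[index % len(self._ring)][1]


# Assumed setup: four reporting queues, routing keys derived from launch IDs.
ring = HashRing([f"reporting.{i}" for i in range(4)])
counts = Counter(ring.queue_for(f"launch-{n}") for n in range(10_000))
print(counts)
```

Because the queue is derived only from the routing key, every message with the same key lands in the same queue, which is what keeps related messages in order while the overall load is shared between queues.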

In the previous implementation, the number of Retry queues had to match the number of reporting queues. Now, we have simplified this by using a single Retry queue for all API Service replicas.

The number of Reporting queues remains the same as before, and you can still define the specific number of reporting queues during deployment.
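The retry rule described earlier (retry only failures related to reporting order, park everything else) can be sketched as a small routing decision. This is an illustrative sketch only: the `ReportingOrderError` class, the `Destination` names, and the `max_attempts` limit are hypothetical and not taken from the ReportPortal code base.

```python
from enum import Enum


class Destination(Enum):
    RETRY = "retry"              # the single shared Retry queue
    PARKING_LOT = "parking-lot"  # messages kept aside, not retried


class ReportingOrderError(Exception):
    """Hypothetical error: e.g. a child item arrived before its parent."""


def route_failed_message(error: Exception, attempts: int, max_attempts: int = 5) -> Destination:
    """Decide where a failed message goes.

    Only reporting-order failures are retried; the attempt limit is an
    assumption added for the example so retries cannot loop forever.
    """
    if isinstance(error, ReportingOrderError) and attempts < max_attempts:
        return Destination.RETRY
    return Destination.PARKING_LOT


print(route_failed_message(ReportingOrderError("parent not found"), attempts=1))
print(route_failed_message(ValueError("malformed payload"), attempts=0))
```

Keeping the decision in one place is what makes a single Retry queue sufficient: the queue a message came from no longer matters, only the kind of failure.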

Previously, messages in the Dead letter queue lacked post-processing, so they accumulated indefinitely, consumed a lot of space in RabbitMQ, and required manual cleanup. The new asynchronous reporting adds post-processing: messages are automatically cleaned up after a defined time-to-live (TTL). This TTL can be configured during deployment and can vary for different workloads.
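One common way to expire messages in RabbitMQ is the per-queue `x-message-ttl` argument, which is expressed in milliseconds. The sketch below builds such an argument set from a TTL given in seconds. Whether ReportPortal applies the TTL through this argument or through another mechanism is an assumption here, and the helper name and the example TTL values are hypothetical.

```python
def ttl_queue_arguments(ttl_seconds: int) -> dict:
    """Build RabbitMQ queue arguments that expire messages after a TTL.

    RabbitMQ expects `x-message-ttl` in milliseconds, so the value given
    in seconds is converted here.
    """
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be positive")
    return {"x-message-ttl": ttl_seconds * 1000}


# Hypothetical per-workload settings: a short TTL for a busy instance,
# a longer one where failed messages should stay inspectable for a while.
busy_instance = ttl_queue_arguments(3600)
quiet_instance = ttl_queue_arguments(7 * 24 * 3600)
print(busy_instance, quiet_instance)
```

A dictionary like this would typically be passed as the `arguments` parameter when the queue is declared by a client library, so the TTL becomes part of the queue definition rather than something each publisher has to set.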

As for Autoscaling, the old asynchronous reporting mode did not support it because we had to hardcode the total number of queues and the number of queues per API service in our deployment. With the new asynchronous reporting implementation, we are glad to introduce API service Autoscaling. Please refer to this documentation and this guide to read more technical details.

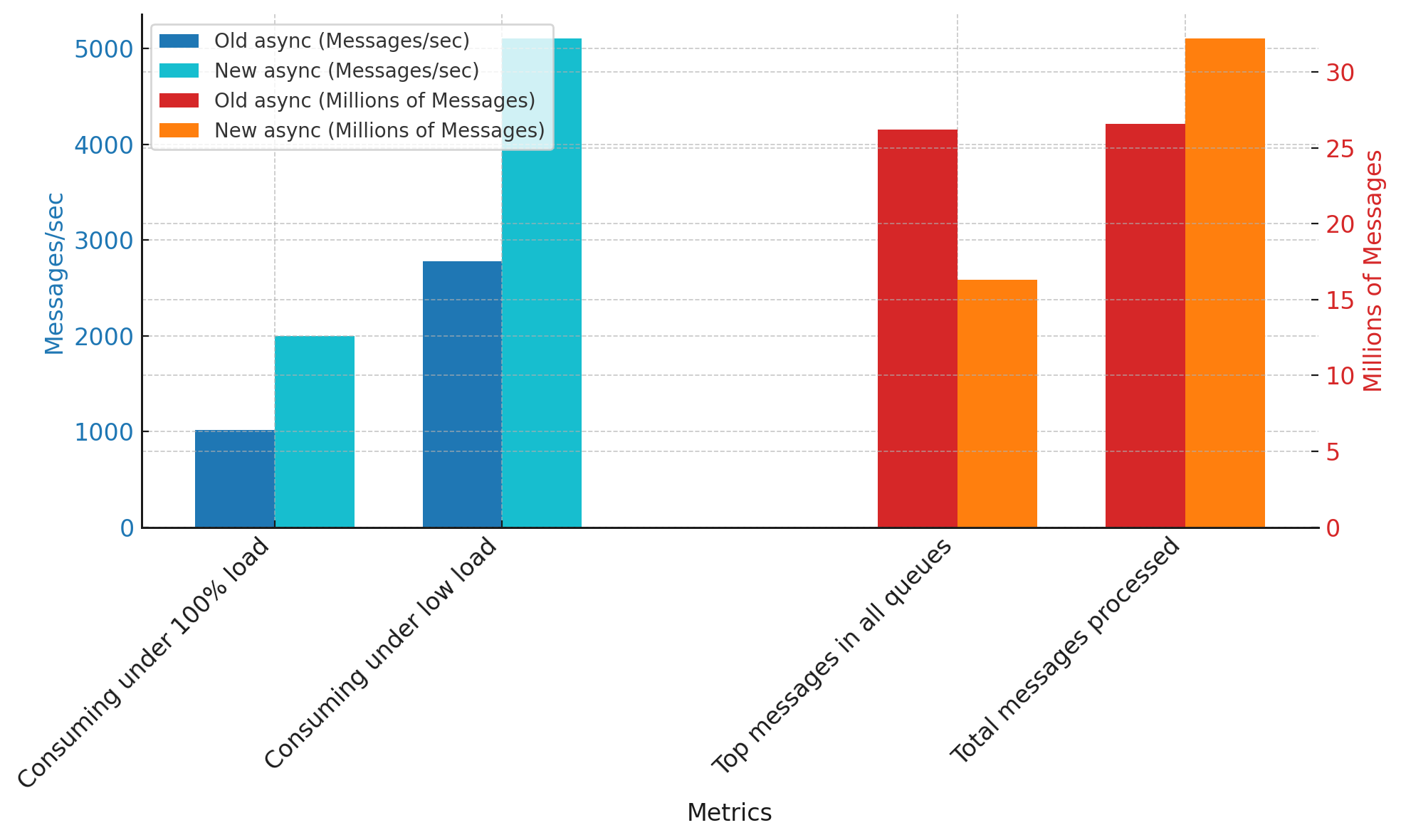

Let’s review the RabbitMQ metrics:

Consuming speed: we offer 2x faster reporting using asynchronous endpoints under a 100% load of ReportPortal compared to the previous implementation.

Total messages processed: performance tests show that we are processing almost 18% more messages within the same time-range compared to the old asynchronous mode.

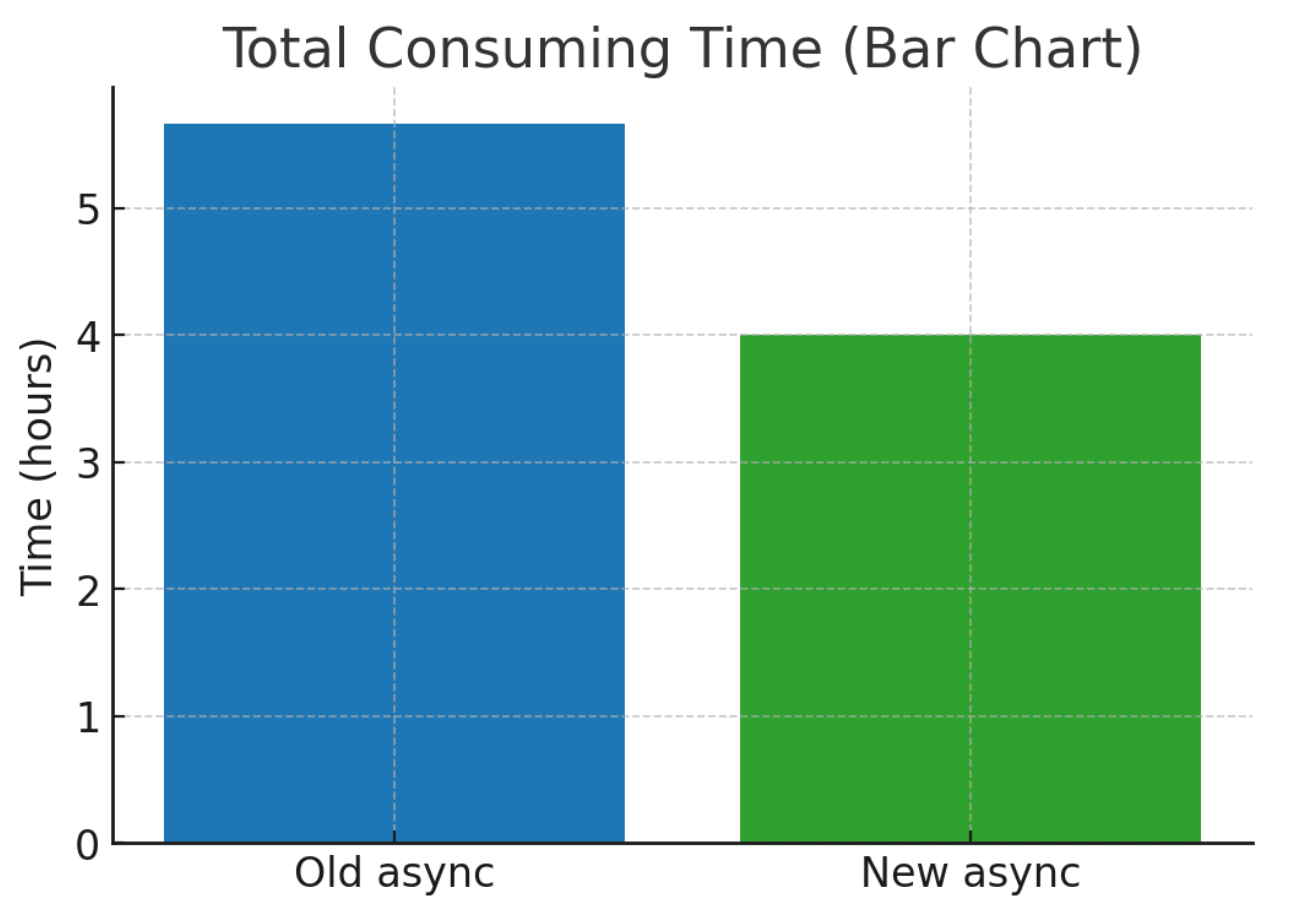

Total consuming time: with the new consistent-hashing exchanges, we need 30% less time to process over 73% more messages.

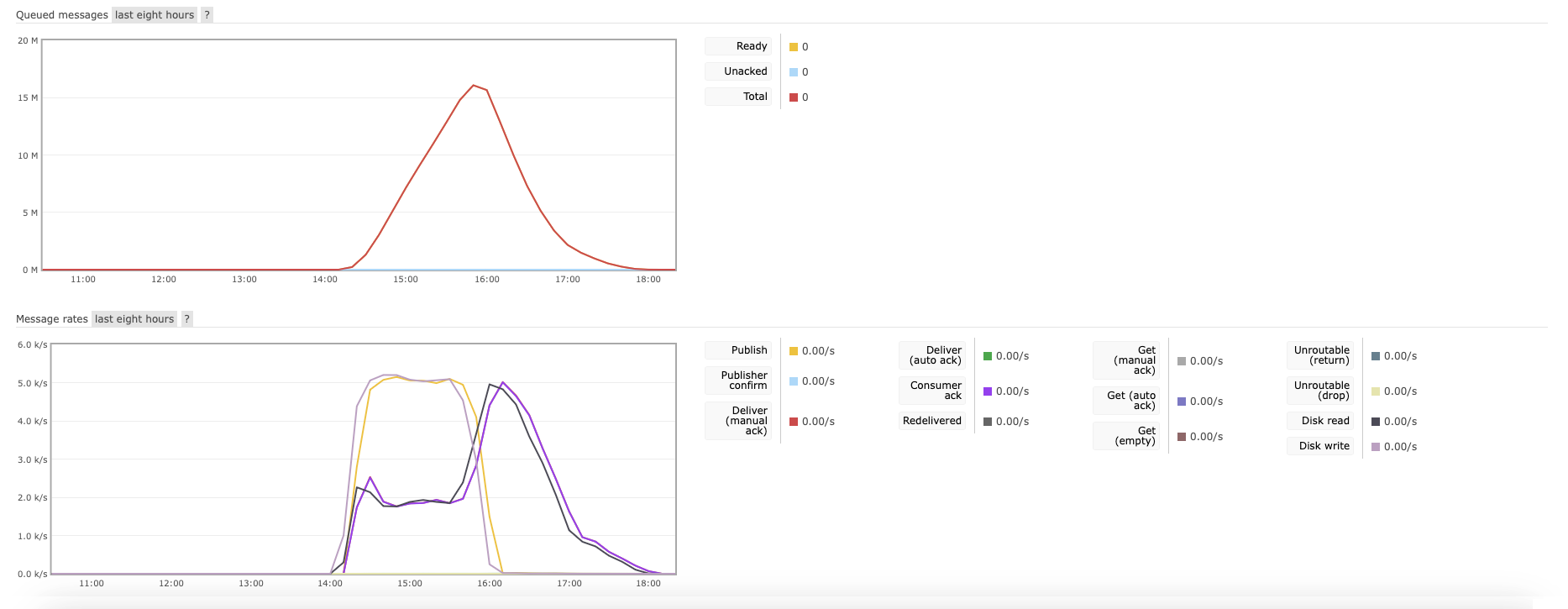

Let's explore how it performs in RabbitMQ:

The main achievement is that we now consume up to 2,000 messages per second under 100% load between 2 and 4 PM, which represents a 100% increase in speed.

Going forward, the streamlined queue structure, improved post-processing with configurable time-to-live settings, and the introduction of autoscaling collectively enhance ReportPortal's ability to handle higher loads more effectively.