ReportPortal is built to help teams work with automated testing results, and it all starts with aggregating those results in a single place. For a long time, a relational database served us well as storage. But the more test cases you run, the more storage you need to keep all the related logs.

Before we introduced PostgreSQL in ReportPortal v5.0, we used MongoDB from version 1 to version 4. MongoDB, a document-oriented non-relational database, was pretty good at writing logs, but it was something of a nightmare for tracking metrics, building charts, and finding insights across testing results.

PostgreSQL resolved data joins for us and made implementing new features much easier and quicker. But it also brought some drawbacks, such as excessive disk space usage to keep and rotate logs, slowdowns on data insertion, high CPU load on data deletion, and constantly required maintenance (VACUUM cleanup).

There are several reasons why PostgreSQL uses more disk space than the data itself. The main ones are the indexes on the data and the several copies of the same data that PostgreSQL keeps because of how it works internally.

Logs make up a large portion of the data inside ReportPortal. Given the workflow around them, logs are constantly added and deleted, with little need to keep them for a long time. Even the Machine Learning behind ReportPortal’s Auto-Analysis feature uses them only a few times for training and then stores them in transformed form inside the ML model. But constant insertions and deletions produce a significant load and a very large storage footprint.

After weighing all the pros and cons, we carried out an in-depth investigation and performance analysis of ElasticSearch vs PostgreSQL from the perspective of ReportPortal’s load model and the nature of its data. The results of this investigation are presented below.

Given these results, our architectural decision was to switch log storage from PostgreSQL to ElasticSearch, well… because we already have it as a part of our application. It also gives us benefits such as a storage footprint reduced by up to 8.5 times and data deletion up to 29 times quicker, with just a fraction of the CPU load.

And also, it opens up the capabilities of the full-text search!

Please read our findings below.

Why do we use ElasticSearch?

PostgreSQL was previously used as the database for log storage, but according to the performance tests, this is not the most effective approach. Log messages take up the most space in the database, so we decided to move them to ElasticSearch. Migrating logs to ElasticSearch will significantly reduce the storage occupied by the log table and improve overall database performance (timings and infrastructure costs).

In version 24.2 ReportPortal will still rely on ElasticSearch v7.10, the last version available under the Apache 2.0 license. Going forward, we will consider Elastic-like solutions and forks, but only after a series of performance analyses and investigations. So please stay tuned. We know this could be an issue for some of our users, but to avoid hasty decisions we have to proceed incrementally.

The licensing policy chosen by Elastic has affected the ability of some enterprise companies to use its solutions, including ElasticSearch. For some companies, this has become a blocking factor. Since it is in our interest to let our clients use ReportPortal everywhere without being restricted by the licenses of our underlying components, we are currently exploring alternative options.

What do you get with ReportPortal v24.2 and the switch to ElasticSearch logging?

It’s not something that you will see right away, but it will definitely benefit you in the long run, with outcomes like:

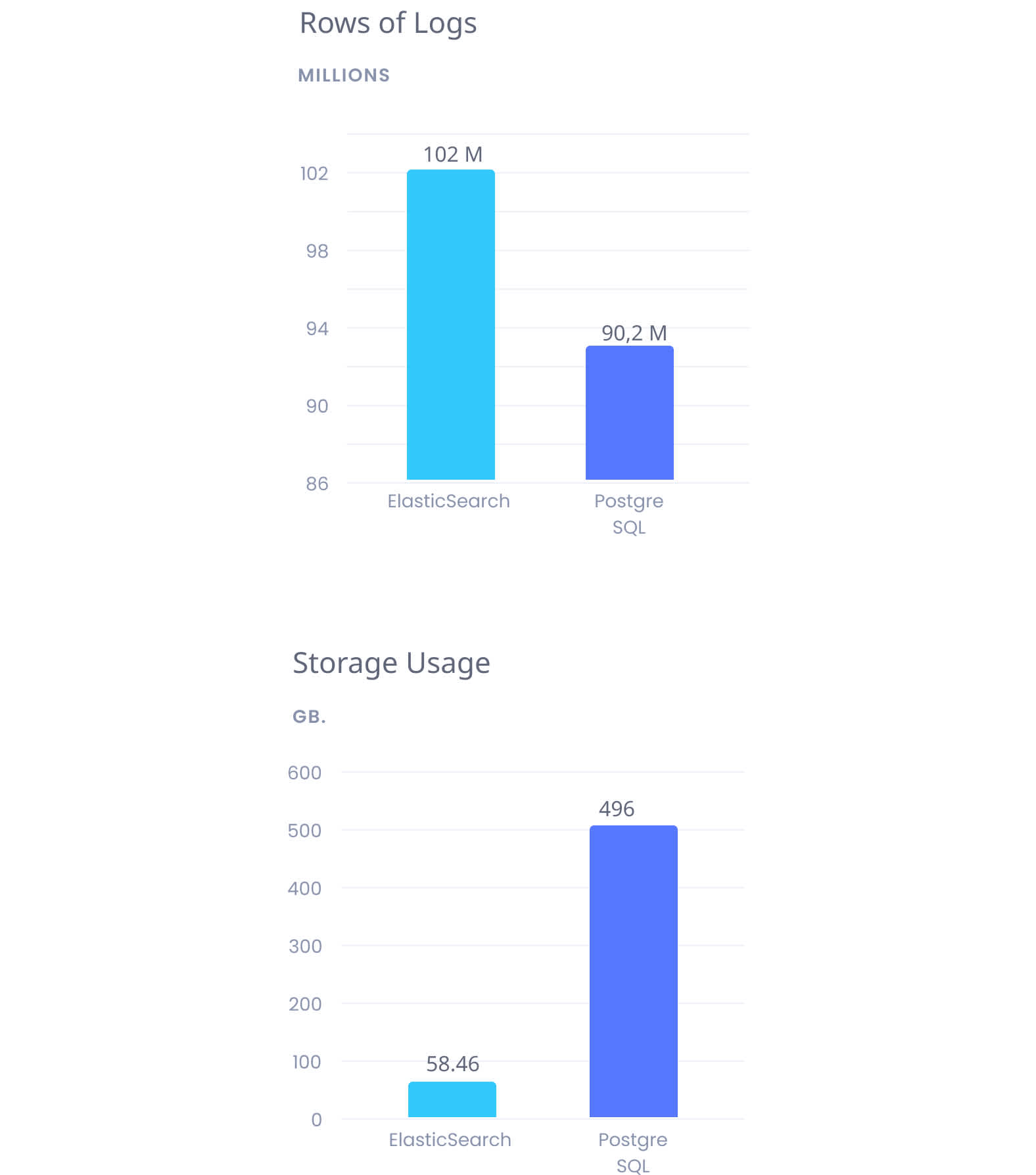

Reduced disk space usage, with a footprint up to 8.5 times smaller.

Reduced maintenance of the PostgreSQL database, and requirements for shape sizes reduced by at least 2 times.

Reduction of the database load caused by pattern analysis by up to 5 times.

Full-text search capabilities for text logs (33 times quicker for text queries, and 1 to 16 times lower CPU utilization in comparison with PostgreSQL).

Similar performance with PostgreSQL on getting logs by ID.

Storing logs in separate indices per project makes it possible to retrieve project data faster and reduces the risk of locks.

ElasticSearch uses up to 8.5 times less storage.

Why do we use Data Streams?

Elasticsearch provides a special approach for storing log data: “A data stream lets you store append-only time series data across multiple indices while giving you a single named resource for requests. Data Streams are well-suited for logs, events, metrics, and other continuously generated data,” as described in the official Elasticsearch documentation.
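Because a data stream is append-only and time-based, every document written to it needs an `@timestamp` field, and bulk requests against it may only use the `create` action. As a minimal sketch (not ReportPortal’s actual implementation), the Python helper below builds an NDJSON body that could be sent to Elasticsearch’s `_bulk` endpoint for a data stream. The function name and the `message` field are hypothetical and chosen only for illustration.

```python
import json
from datetime import datetime, timezone


def build_bulk_body(log_messages):
    """Build an NDJSON body for POST /<data-stream>/_bulk.

    Hypothetical helper: the "message" field name is an assumption,
    not ReportPortal's real log schema.
    """
    lines = []
    for message in log_messages:
        # Data streams are append-only, so only the "create" action is allowed.
        lines.append(json.dumps({"create": {}}))
        # Every document in a data stream must carry an @timestamp field.
        lines.append(json.dumps({
            "@timestamp": datetime.now(timezone.utc).isoformat(),
            "message": message,
        }))
    # The bulk API expects the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_bulk_body(["Test started", "Assertion failed"]), end="")
```

Each log entry becomes two lines: the action line and the document itself. The data stream name is part of the request URL, so it does not appear in the body.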

Data Streams benefits

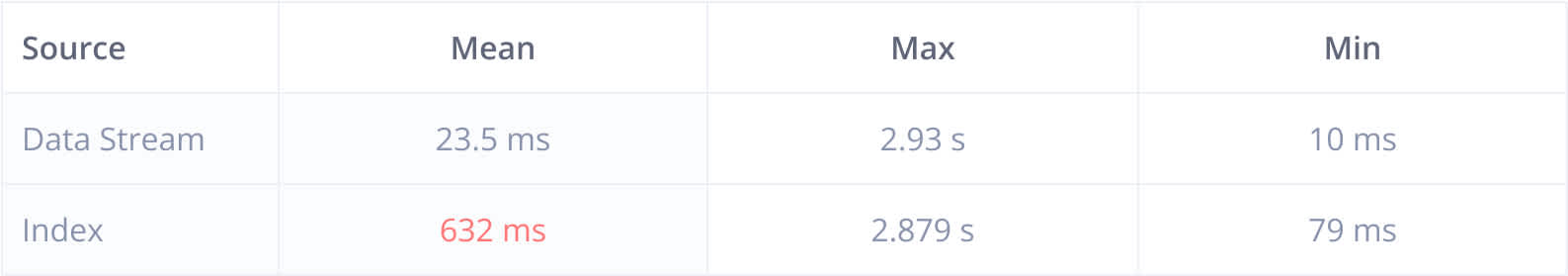

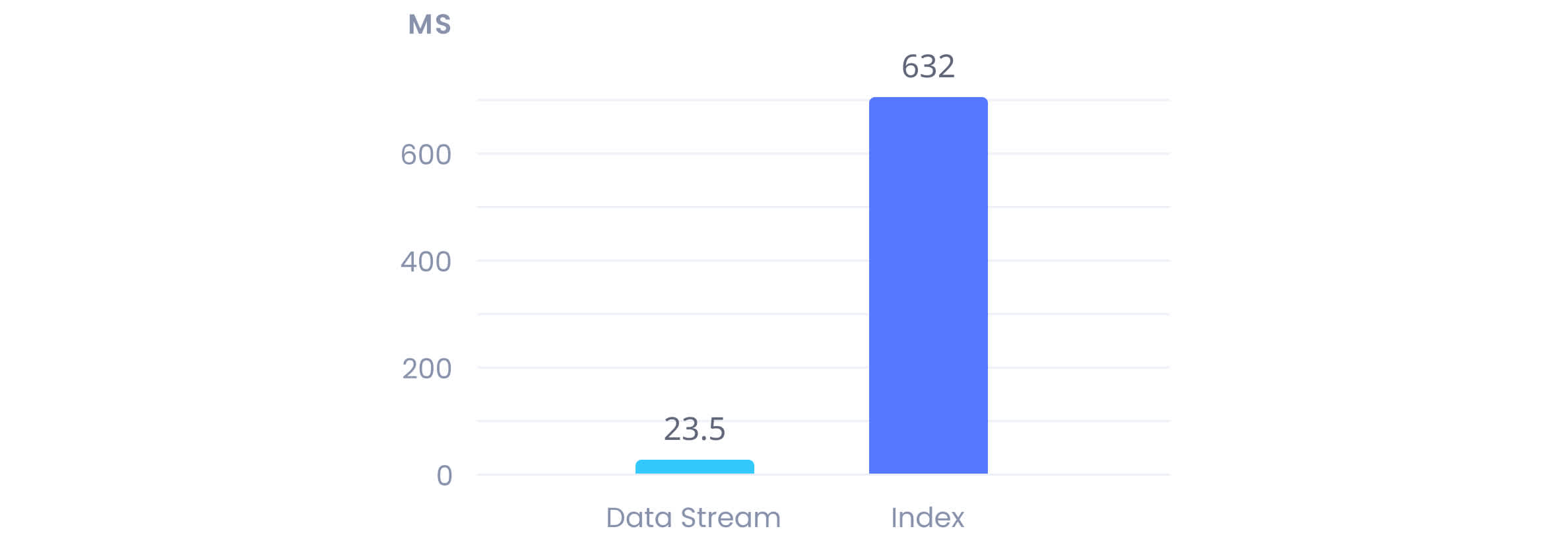

Log deletion by IDs is 29 times faster in data streams than in regular indices;

Fast log insertion (reporting) during periods of high workload;

Creation of cheap data nodes for old data, e.g., HDD-backed nodes with low resources. ElasticSearch allows configuring old-data storage using an ILM (Index Lifecycle Management) policy. This might be useful, for example, if your project uses some information only once per week or month;

Various index rollover conditions allow fast creation of a new generation. A new generation of the data stream is created when a limit is reached (by log count, by log volume, or by date), and subsequent logs of this data stream go to the new generation. Limits can be specified in the ILM policy per project.

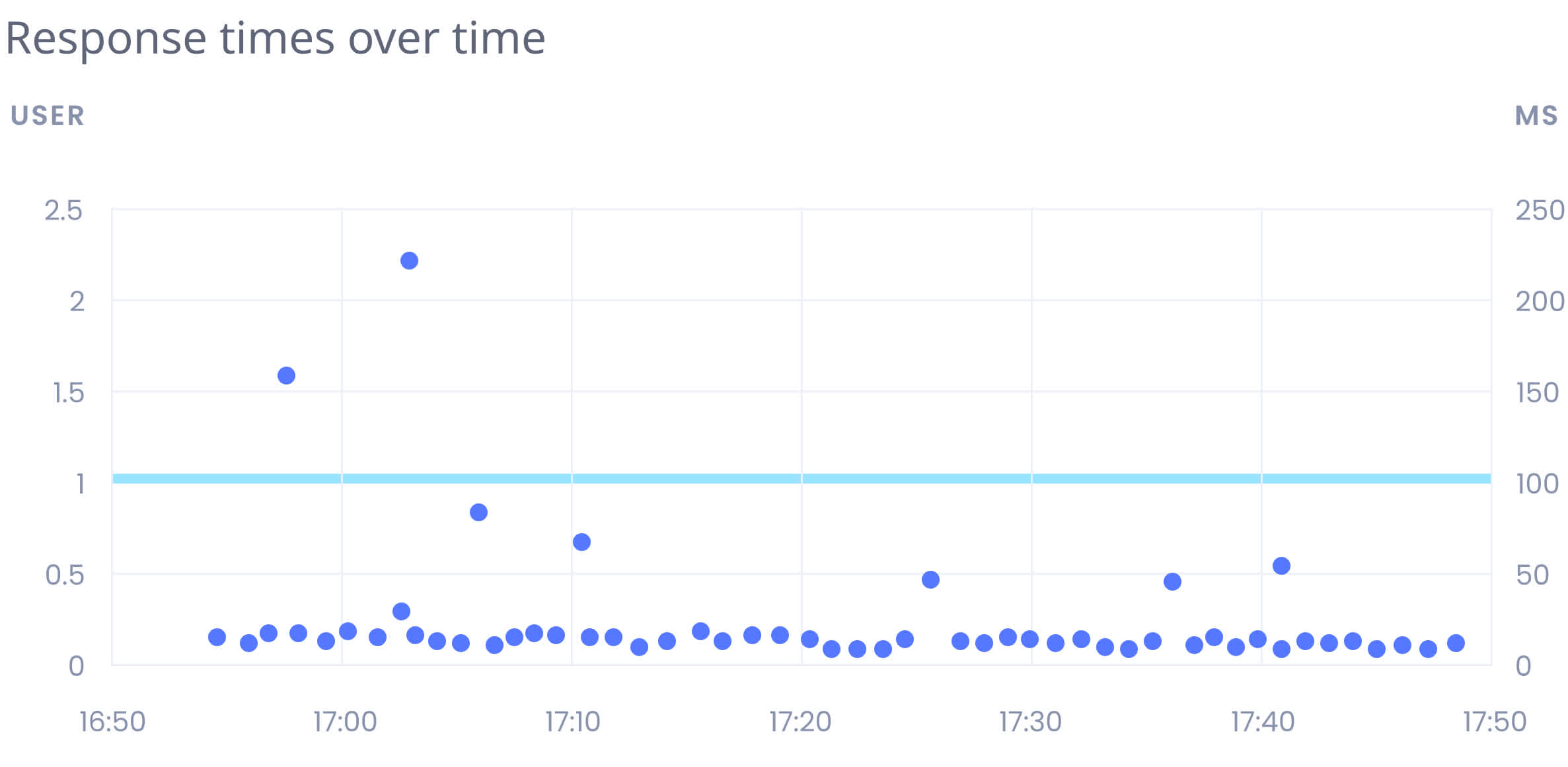
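To make the rollover limits and the cheap storage for old data more concrete, here is a hedged sketch of what an ILM policy and a matching data stream template might look like. It only builds the JSON bodies that Elasticsearch accepts at `PUT _ilm/policy/<name>` and `PUT _index_template/<name>`. All values, the function names, and the `data: warm` node attribute are assumptions for illustration, not ReportPortal’s real settings.

```python
import json


def build_ilm_policy(max_age="7d", max_docs=10_000_000, max_size="50gb",
                     warm_after="30d"):
    """Body for PUT _ilm/policy/<name>. All limits are illustrative."""
    return {
        "policy": {
            "phases": {
                "hot": {
                    "actions": {
                        # A new generation is created when any limit is hit:
                        # by date, by document count, or by index size.
                        "rollover": {
                            "max_age": max_age,
                            "max_docs": max_docs,
                            "max_size": max_size,
                        }
                    }
                },
                "warm": {
                    "min_age": warm_after,
                    "actions": {
                        # Assumes cheap nodes are started with the custom
                        # attribute node.attr.data=warm (hypothetical setup).
                        "allocate": {"require": {"data": "warm"}}
                    },
                },
            }
        }
    }


def build_index_template(index_pattern, policy_name):
    """Body for PUT _index_template/<name> that enables a data stream."""
    return {
        "index_patterns": [index_pattern],
        "data_stream": {},  # marks matching names as data streams
        "template": {
            "settings": {"index.lifecycle.name": policy_name}
        },
    }


if __name__ == "__main__":
    # Hypothetical policy and pattern names.
    print(json.dumps(build_ilm_policy(), indent=2))
    print(json.dumps(build_index_template("logs-project-*", "logs-policy"), indent=2))
```

Keeping the limits as function parameters reflects the idea that each project can have its own policy with its own rollover thresholds.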

Response times table (95th percentile)

Deletion by IDs performance comparison

Log deletion by IDs from data streams is 29 times faster than from an index.


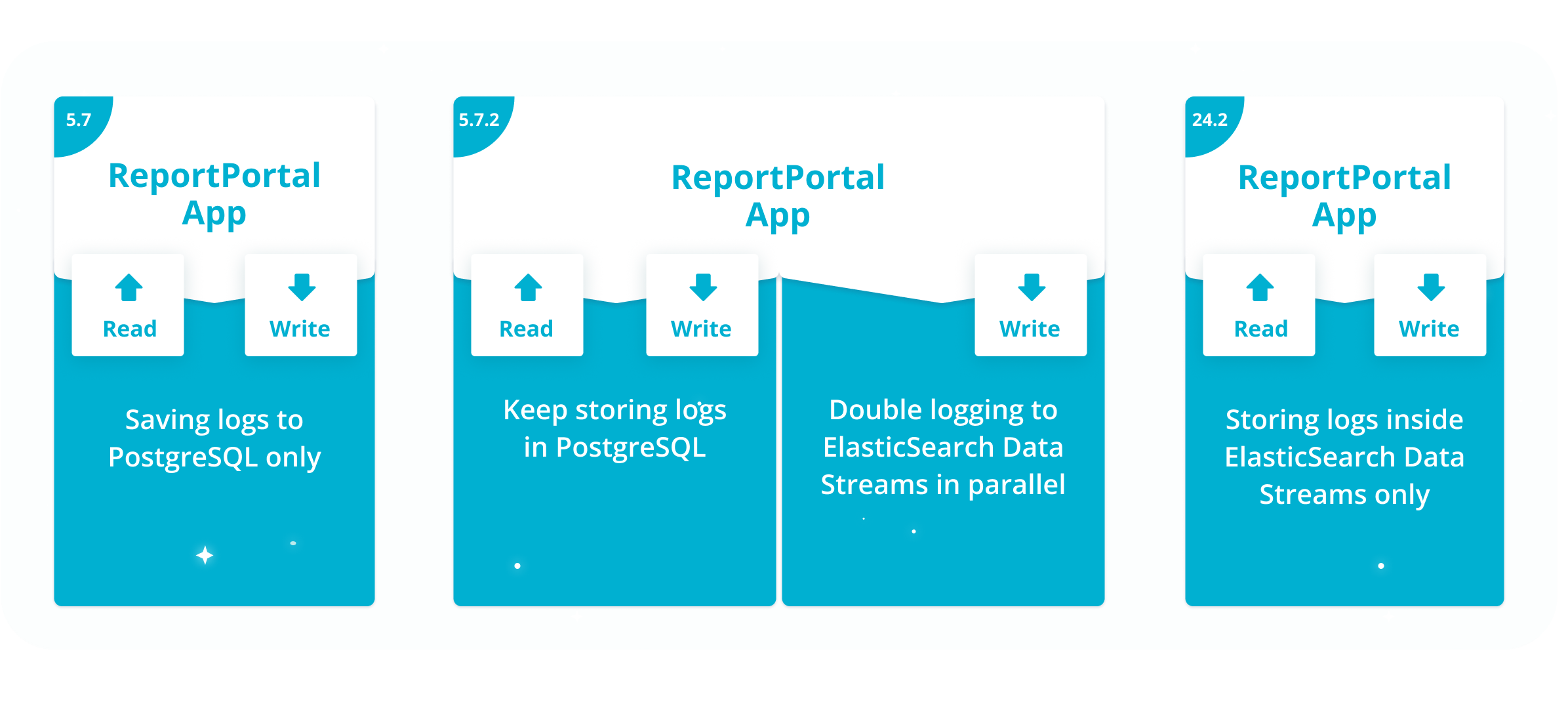

What effort is required from users?

We recommend updating to version 5.7.2 for a smooth transition to full logging in ElasticSearch, especially if you have many logs. If you update to version 5.7.2, use it for 3-4 months before moving to version 24.2. This period will be enough for the vast majority of projects to build up enough log history inside ElasticSearch. Then update to version 24.2 once it is available. Since all logs will already be stored in ElasticSearch, no migration effort will be required. Along with version 24.2 we will distribute a migration script and instructions for data migration so that you can easily migrate from an earlier 5.x version.

Note 1

Before version 24.2, double logging might increase resource usage (CPU, disk space).

Note 2

We already use ElasticSearch under its existing license, so no new license is required. For now, we stay on version 7.10 under Apache 2.0. We might switch to OpenSearch in the future.

How to enable or disable double entry?

If you want to enable double entry, perform the following steps:

1. Add ElasticSearch to your deployment (if you use Docker, you can check how to add it here).

2. Add this variable to service-api and service-jobs:

RP_ELASTICSEARCH_HOST=<your-host>

3. If you have authorization in your ElasticSearch, you also need to add these variables:

RP_ELASTICSEARCH_USERNAME=<your-elastic-username>

RP_ELASTICSEARCH_PASSWORD=<your-elastic-password>

If you want to disable double entry, then you should remove all these variables from these services.
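Before restarting the services, it can help to check which of these variables are actually set in the environment. The Python sketch below mirrors the rule described above: double entry is on when `RP_ELASTICSEARCH_HOST` is present, and credentials are optional. The function `double_entry_settings` is a hypothetical helper, not part of ReportPortal, and it does not reflect how service-api or service-jobs read their configuration internally.

```python
import os


def double_entry_settings(env=None):
    """Return the ElasticSearch settings implied by the environment,
    or None when double entry would be disabled.

    Hypothetical helper for a pre-deployment check only.
    """
    env = os.environ if env is None else env
    host = env.get("RP_ELASTICSEARCH_HOST")
    if not host:
        # No host variable: double entry is disabled.
        return None
    settings = {"host": host}
    username = env.get("RP_ELASTICSEARCH_USERNAME")
    password = env.get("RP_ELASTICSEARCH_PASSWORD")
    # Credentials are only needed when ElasticSearch requires authorization.
    if username and password:
        settings["auth"] = (username, password)
    return settings


if __name__ == "__main__":
    current = double_entry_settings()
    if current is None:
        print("Double entry is disabled")
    else:
        print("Double entry is enabled for host:", current["host"])
```

The check has to be run in the environment of both service-api and service-jobs, since the variables are added to each of them.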

To summarize, using ElasticSearch and Data Streams will bring significant performance benefits in the future.