Timely and reliable reporting is essential for maintaining visibility, traceability, and quality control. However, teams may occasionally experience delays in launch reporting, where the time between test execution and data visibility in the ReportPortal interface becomes unexpectedly long. These delays can undermine rapid feedback loops, disrupt CI/CD workflows, and complicate post-launch analysis. This article outlines the most common reasons for delays in reporting launches to ReportPortal and provides practical solutions to help you identify, mitigate, and prevent them.

Potential causes of delays in launch reporting:

1. Queue processing delays
2. Launch finish request timing
3. Reporting under a finished launch
4. Infrastructure bottlenecks
5. High volume of data
6. Misconfigured reporting logic
7. Rerun launches
8. Auto-Analysis and Quality Gates

In the sections that follow, we’ll explore each of these issues in detail – along with practical solutions to help you address and prevent delays in launch reporting.

1. Queue processing delays

Cause: ReportPortal uses an asynchronous queue mechanism to handle reporting events. If the queue is overloaded, it can lead to delays.

Solution:

Monitor the queue size and processing time.

Scale up the infrastructure by increasing the number of consumers or processing nodes.

Optimize reporting logic to reduce unnecessary requests.

Note: These metrics are available in the RabbitMQ management UI, which by default is served at http://<host_name>:15672.
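The queue check can also be scripted. The following is a minimal sketch, assuming the RabbitMQ management plugin is enabled and its HTTP API is reachable on the same port as the UI. The `/api/queues` endpoint and its `name`, `messages`, and `consumers` fields belong to the RabbitMQ management API; the function names, the credentials, and the threshold of 1000 messages are illustrative assumptions, not ReportPortal recommendations.

```python
import base64
import json
import urllib.request


def fetch_queues(base_url, user, password):
    """Fetch the queue list from the RabbitMQ management HTTP API."""
    request = urllib.request.Request(f"{base_url}/api/queues")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def find_backlogged_queues(queues, max_messages=1000):
    """Return (name, messages, consumers) for queues above the backlog threshold.

    The default threshold is an arbitrary example value; tune it to your instance.
    """
    return [
        (q["name"], q.get("messages", 0), q.get("consumers", 0))
        for q in queues
        if q.get("messages", 0) > max_messages
    ]


# Example usage (host and credentials are placeholders):
# queues = fetch_queues("http://<host_name>:15672", "<user>", "<password>")
# for name, messages, consumers in find_backlogged_queues(queues):
#     print(f"{name}: {messages} messages waiting, {consumers} consumers")
```

A backlog that keeps growing while the consumer count stays flat suggests that adding consumers or reducing request volume is worth trying first.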

2. Launch finish request timing

Cause: If the launch finish request is not the last one in the queue, the launch is still marked as finished, but the requests queued behind it are processed afterwards. As a result, launch statistics may continue to update after the launch already appears finished.

Solution:

Ensure all test items are reported before sending the launch finish request.

Use proper synchronization mechanisms in your test framework to avoid premature launch closure.
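One way to approach this on the client side is to wait for every item-reporting call to return before sending the finish request. The sketch below assumes that items are reported from worker threads; `report_item` and `finish_launch` are hypothetical callables standing in for whatever your agent or client exposes, not ReportPortal API names.

```python
from concurrent.futures import ThreadPoolExecutor, wait


def run_launch(items, report_item, finish_launch, max_workers=4):
    """Report all items concurrently, then finish the launch.

    `report_item` and `finish_launch` are placeholders for your own
    reporting calls. Returns the exceptions raised while reporting.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(report_item, item) for item in items]
        wait(futures)  # block until every item report has completed
    errors = [f.exception() for f in futures if f.exception() is not None]
    finish_launch()  # sent only after all item reports have returned
    return errors
```

This only orders the requests as they leave the client. If the client or server queues requests asynchronously, the finish request can still be processed before earlier items, so it is worth confirming how your agent flushes its own buffers.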

3. Reporting under a finished launch

Cause: Items reported after a launch is marked as finished will not be included in post-launch processes like Auto-Analysis or Quality Gates.

Solution:

Avoid reporting items under a finished launch.

Ensure the launch remains in the "IN PROGRESS" state until all items are reported.

4. Infrastructure bottlenecks

Cause: Insufficient resources in the ReportPortal infrastructure, such as database performance, memory, or CPU, can cause delays.

Solution:

Optimize database performance (e.g., indexing, query optimization).

Allocate more resources (CPU, memory) to the ReportPortal server.

Reduce network latency by deploying ReportPortal closer to the test execution environment.

5. High volume of data

Cause: Reporting many test items or logs simultaneously can overwhelm the system.

Note: Depending on the instance shape, there is a limit on the number of parallel HTTP requests per second.

Solution:

Distribute tests across multiple launches.

Monitor CPU and RAM usage on the ReportPortal instance.

Batch the reporting of test items to avoid overloading the system.

Use log-level filtering to reduce the volume of logs sent to ReportPortal.

If your tests use DEBUG-level logging, it can put significant load on the API, and on RabbitMQ as well when asynchronous reporting is used. As a result, the Reporting API response time may increase noticeably. To avoid this, check the logging level in your tests and disable DEBUG unless necessary. Doing so can significantly improve reporting performance.
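Log-level filtering and batching can be combined in the test code itself. The following is a minimal sketch built on Python's standard `logging` module. It assumes logs pass through a custom handler before being sent; `BatchingHandler` and the `send_batch` callable are hypothetical names for illustration, and real ReportPortal agents may already provide their own log-level and batch-size settings.

```python
import logging


class BatchingHandler(logging.Handler):
    """Drop records below `level` and forward the rest in fixed-size batches."""

    def __init__(self, send_batch, batch_size=20, level=logging.INFO):
        super().__init__(level=level)  # records below this level never reach emit()
        self.send_batch = send_batch   # placeholder for the call that ships logs
        self.batch_size = batch_size
        self._buffer = []

    def emit(self, record):
        self._buffer.append(
            {"level": record.levelname, "message": record.getMessage()}
        )
        if len(self._buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        # Send whatever is buffered; call this once more at the end of the run.
        if self._buffer:
            batch, self._buffer = self._buffer, []
            self.send_batch(batch)
```

With the handler level left at INFO, DEBUG records are discarded before they reach the buffer, so they never generate requests, and the remaining records are sent as one call per batch instead of one call per line.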

6. Misconfigured reporting logic

Cause: Incorrect implementation of the reporting logic in the test framework can lead to delays.

Solution:

Review the integration of your test framework with ReportPortal.

Ensure the reporting logic adheres to the ReportPortal API guidelines.

7. Rerun launches

Cause: When rerunning a launch, the "rerun": true parameter must be included in the body of the start launch request. Omitting it can lead to delays or incorrect reporting.

Solution:

Use the correct API endpoint and include the "rerun": true parameter in the request body for rerun launches.
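As an illustration, a request body for a rerun might be built as follows. This is a sketch under assumptions: only the `rerun` flag comes from this article. The `name` and `startTime` fields, the optional `rerunOf` field for targeting a specific launch by UUID, and the helper name `build_rerun_body` are assumptions that should be checked against the API documentation for your ReportPortal version.

```python
import json


def build_rerun_body(name, start_time, rerun_of=None):
    """Build a start-launch body that marks the launch as a rerun.

    Field names other than "rerun" are assumptions; verify them
    against the API documentation for your ReportPortal version.
    """
    body = {"name": name, "startTime": start_time, "rerun": True}
    if rerun_of is not None:
        body["rerunOf"] = rerun_of  # assumed field: UUID of the launch to rerun
    return body


# Serializes the Python True as JSON true, i.e. "rerun": true
payload = json.dumps(build_rerun_body("<launch_name>", "<start_time>"))
```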

8. Auto-Analysis and Quality Gates

Cause: Post-launch processes like Auto-Analysis and Quality Gates can take time to execute, especially for large launches.

Solution:

Optimize the configuration of Auto-Analysis and Quality Gates.

Schedule these processes during off-peak hours to reduce delays.

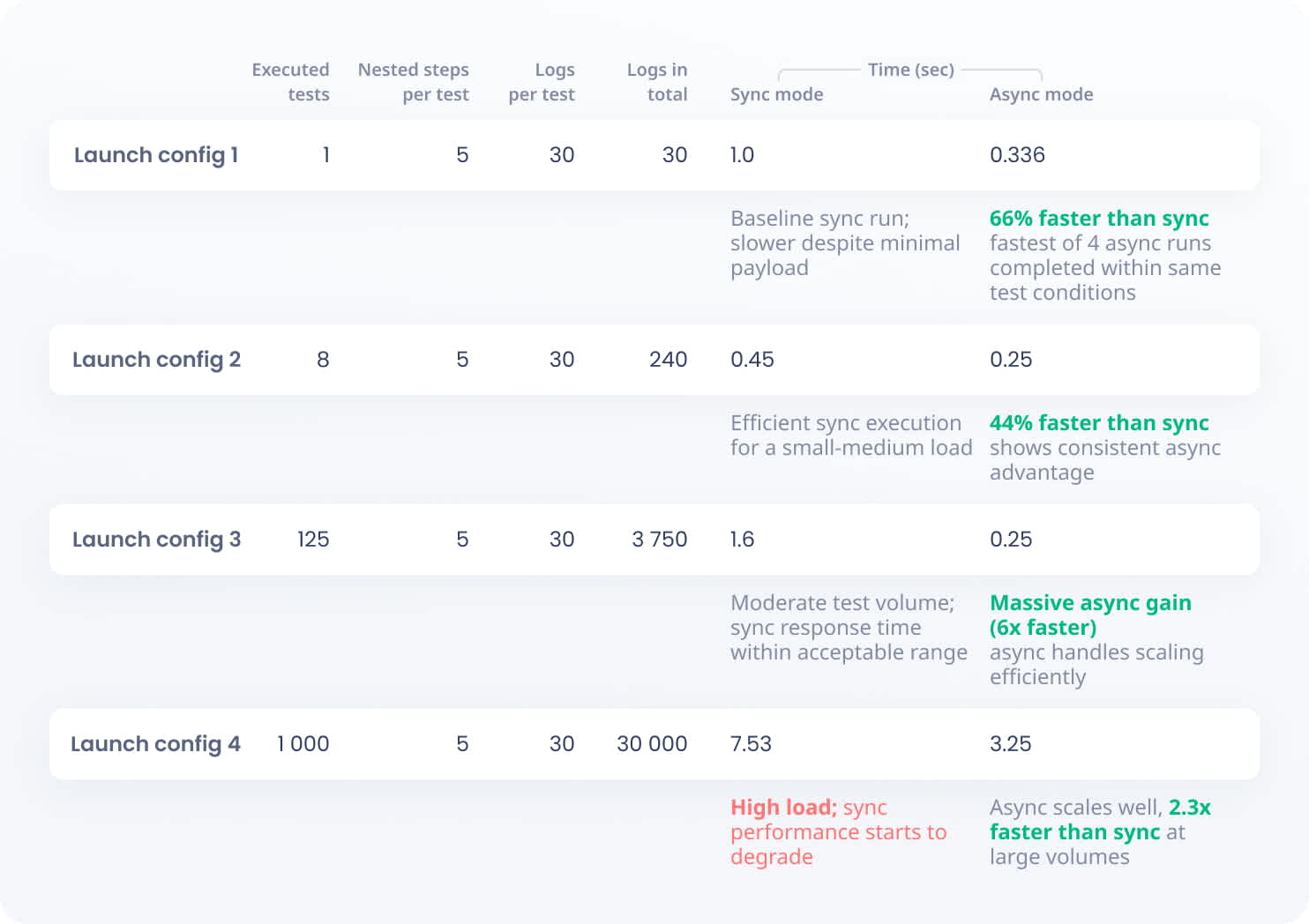

Reporting API response time by launch configuration and mode

If you encounter delays when reporting launches to ReportPortal, start with the following steps to diagnose and resolve the issue:

Check the launch state: Ensure the launch is in the "IN PROGRESS" state before reporting items.

Monitor performance: Use metrics such as CPU and RAM usage to confirm that the instance has enough computing capacity.

Review logs: Analyze the logs of both the test framework and ReportPortal for errors or warnings.

Test the API: Use tools like Postman to verify API behavior and measure response times.

These steps can help you systematically narrow down the source of reporting delays and verify whether the issue lies in infrastructure, reporting logic, or API configuration.
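The API check from the steps above can also be scripted so that response times are compared across runs. The following is a minimal sketch; `measure_latency` is a hypothetical helper, and `call` stands for any zero-argument function that performs one request against your instance.

```python
import statistics
import time


def measure_latency(call, repeats=5):
    """Time `call` several times and summarize the durations in seconds."""
    samples = []
    for _ in range(repeats):
        started = time.perf_counter()
        call()
        samples.append(time.perf_counter() - started)
    return {
        "min": min(samples),
        "median": statistics.median(samples),
        "max": max(samples),
    }
```

Running the same measurement with DEBUG logging on and off, or in synchronous and asynchronous mode, gives a like-for-like view of how each setting affects response time on your own instance.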